Shared Hierarchical Representations Explain Temporal Correspondence Between Brain Activity and Deep Neural Networks

Lutzow Holm, E.; Marrafini, G.; Fernandez Slezak, D.; Tagliazucchi, E.

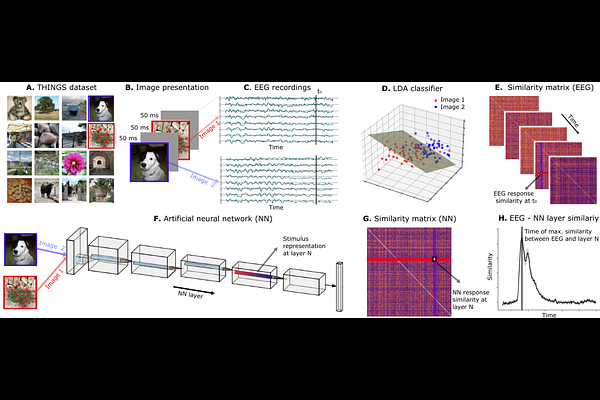

Abstract

The visual cortex and artificial neural networks both process images hierarchically, progressing from low-level features to high-level semantic representations. We investigated the temporal correspondence between activations from multiple convolutional and transformer-based neural network models (AlexNet, MoCo, ResNet-50, VGG-19, and ViT) and human EEG responses recorded during visual perception tasks. Using two EEG datasets in which images were presented for different durations, we assessed whether this correspondence reflects general architectural principles or model-specific computations. Our analysis revealed a robust mapping: early EEG components correlated with activations from initial network layers and low-level visual features, whereas later components aligned with deeper layers and semantic content. Moreover, for images presented for longer durations, the extent of correspondence correlated with the semantic contribution to the EEG response. These findings highlight a consistent temporal alignment between biological and artificial vision, suggesting that this correspondence is primarily driven by the hierarchical transformation of visual into semantic representations rather than by idiosyncratic computational features of individual network architectures.
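The abstract does not state how layer activations were compared with EEG time points. One common approach to this kind of question is time-resolved representational similarity analysis, and the sketch below illustrates that idea only; it is not the authors' pipeline. All names (`layer_time_correspondence`, `early`, `late`), the array shapes, and the synthetic data are assumptions made for illustration.

```python
import numpy as np

def rdm(features):
    """Dissimilarity matrix: 1 - Pearson correlation between image rows."""
    return 1.0 - np.corrcoef(features)

def upper(m):
    """Vectorize the strict upper triangle of a square matrix."""
    i, j = np.triu_indices(m.shape[0], k=1)
    return m[i, j]

def rank(x):
    """Ordinal ranks (ties are not averaged; adequate for continuous data)."""
    r = np.empty(len(x))
    r[np.argsort(x)] = np.arange(len(x))
    return r

def spearman(a, b):
    """Spearman correlation computed as Pearson correlation of ranks."""
    return np.corrcoef(rank(a), rank(b))[0, 1]

def layer_time_correspondence(eeg, layers):
    """eeg: (n_images, n_channels, n_times); layers: list of (n_images, n_units).
    Returns an (n_layers, n_times) array of RDM-to-RDM Spearman correlations."""
    n_times = eeg.shape[2]
    layer_rdms = [upper(rdm(a)) for a in layers]
    out = np.zeros((len(layers), n_times))
    for t in range(n_times):
        eeg_rdm = upper(rdm(eeg[:, :, t]))
        for k, layer_rdm in enumerate(layer_rdms):
            out[k, t] = spearman(layer_rdm, eeg_rdm)
    return out

# Synthetic stand-in data (hypothetical, not from the paper): EEG at early
# time points is a noisy projection of "early layer" features, and at late
# time points a noisy projection of "late layer" features.
rng = np.random.default_rng(0)
n_images, n_units, n_channels, n_times = 40, 30, 16, 10
early = rng.normal(size=(n_images, n_units))
late = rng.normal(size=(n_images, n_units))
w_early = rng.normal(size=(n_units, n_channels))
w_late = rng.normal(size=(n_units, n_channels))

eeg = np.zeros((n_images, n_channels, n_times))
for t in range(n_times):
    signal = early @ w_early if t < n_times // 2 else late @ w_late
    eeg[:, :, t] = signal + 0.1 * rng.normal(size=(n_images, n_channels))

corr = layer_time_correspondence(eeg, [early, late])
peak_times = corr.argmax(axis=1)  # time index of best match for each layer
```

In this toy setup each row of `corr` is a time course for one layer, so comparing `peak_times` across layers is one way to ask whether deeper layers align with later EEG components. With real data, `layers` would hold activations extracted from a network for the same images shown to participants.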