Modulation of decodable semantic features of brain activity via selective attention

Okuno, A.; Yamaguchi, H. Q.; Nishimoto, S.; Nakai, T.

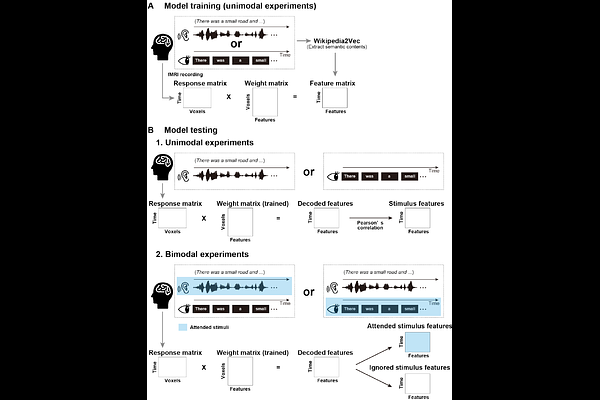

Abstract

We frequently encounter linguistic information simultaneously in multiple modalities, such as text and speech. In such cases, we understand the information by selectively attending to one of the modalities. Previous research has shown that selective attention to a specific stimulus modality modulates cortical activity patterns. However, it remains unclear which aspects of linguistic information are selectively modulated by attention and how such modulation affects the decodability of semantic content. To address this question, we constructed models that decode latent semantic features from the functional magnetic resonance imaging (fMRI) data of six participants under unimodal conditions (visual or auditory only) and bimodal conditions (simultaneous visual and auditory presentation, with participants attending to one modality at a time). In the unimodal conditions, we successfully decoded semantic content from brain activity. The decodable features were consistent across modalities in both intra-modal and cross-modal decoding. In the bimodal conditions, decoding accuracy was higher for attended than for ignored stimuli when the training and test stimuli belonged to the same modality. Furthermore, the decodable features were more consistent across modalities for attended than for ignored stimuli in both intra-modal and cross-modal decoding. These results indicate that decodable semantic features are shared regardless of presentation modality, and that selective attention enhances the semantic representations that contribute to this decodability.
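The abstract does not specify which decoding model was used. The following is a minimal, hypothetical sketch of how intra-modal, cross-modal, and attended-versus-ignored decoding accuracies can be computed, assuming a linear ridge-regression decoder that maps voxel responses to latent semantic feature vectors, and using purely synthetic data. The mapping matrices, noise level, ridge penalty `alpha`, the helper names (`decode`, `brain`, `feats`), and the attention "gain" values (1.0 attended, 0.3 ignored) are all illustrative assumptions, not the authors' method or data. Accuracy is scored as the Pearson correlation between true and decoded values for each feature.

```python
import numpy as np

rng = np.random.default_rng(0)
N_TRAIN, N_TEST, N_VOX, N_FEAT = 500, 100, 100, 10


def fit_ridge(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^(-1) X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


def featurewise_corr(Y_true, Y_pred):
    """Pearson correlation between true and decoded values, one per feature."""
    yt = Y_true - Y_true.mean(axis=0)
    yp = Y_pred - Y_pred.mean(axis=0)
    return (yt * yp).sum(axis=0) / np.sqrt((yt**2).sum(axis=0) * (yp**2).sum(axis=0))


def decode(X_tr, Y_tr, X_te, Y_te, alpha=10.0):
    """Train a decoder (voxels -> semantic features) and score it on test data."""
    mu_x, mu_y = X_tr.mean(axis=0), Y_tr.mean(axis=0)  # center with training means
    W = fit_ridge(X_tr - mu_x, Y_tr - mu_y, alpha)
    Y_hat = (X_te - mu_x) @ W + mu_y
    return featurewise_corr(Y_te, Y_hat)


# --- Synthetic data (hypothetical; not the study's stimuli or recordings) ---
# Assumption: each modality maps semantic features to voxels through a shared
# component plus a smaller modality-specific component.
A_shared = rng.standard_normal((N_FEAT, N_VOX))
A = {m: A_shared + 0.3 * rng.standard_normal((N_FEAT, N_VOX)) for m in ("vis", "aud")}


def brain(streams, noise=3.0):
    """Simulated voxel responses: Gaussian noise plus sum of gain * (features @ mapping).

    `streams` is a list of (features, modality, gain) tuples; the gain is a
    hypothetical stand-in for how strongly a stream is represented.
    """
    n = streams[0][0].shape[0]
    X = noise * rng.standard_normal((n, N_VOX))
    for Z, mod, gain in streams:
        X += gain * (Z @ A[mod])
    return X


def feats(n):
    """Random latent semantic feature vectors for n samples."""
    return rng.standard_normal((n, N_FEAT))


# Unimodal conditions: decoder trained on visual data, tested within and across modality.
Zv_tr, Zv_te, Za_te = feats(N_TRAIN), feats(N_TEST), feats(N_TEST)
Xv_tr = brain([(Zv_tr, "vis", 1.0)])
r_intra = decode(Xv_tr, Zv_tr, brain([(Zv_te, "vis", 1.0)]), Zv_te)  # vis -> vis
r_cross = decode(Xv_tr, Zv_tr, brain([(Za_te, "aud", 1.0)]), Za_te)  # vis -> aud

# Bimodal conditions: different content in each stream, one stream attended.
# Toy assumption: attention scales a stream's gain (1.0 attended, 0.3 ignored).
Zv_bi, Za_bi = feats(N_TEST), feats(N_TEST)
X_att_vis = brain([(Zv_bi, "vis", 1.0), (Za_bi, "aud", 0.3)])
X_att_aud = brain([(Zv_bi, "vis", 0.3), (Za_bi, "aud", 1.0)])
r_attended = decode(Xv_tr, Zv_tr, X_att_vis, Zv_bi)  # visual content, attended
r_ignored = decode(Xv_tr, Zv_tr, X_att_aud, Zv_bi)   # visual content, ignored

for name, r in [("intra-modal", r_intra), ("cross-modal", r_cross),
                ("attended", r_attended), ("ignored", r_ignored)]:
    print(f"{name:12s} mean r = {r.mean():.2f}")
```

Running the script prints the mean feature-wise correlation for each condition. Because attention is modeled here only as a multiplicative gain, the attended-versus-ignored gap is built into the simulation by construction; the sketch shows how such a comparison can be computed, not evidence for the effect.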