Using computer vision to quantify facial expressions of children with autism during naturalistic social interactions

Manelis, L.; Barami, T.; Ilan, M.; Meiri, G.; Menashe, I.; Soskin, E.; Sofer, C.; Dinstein, I.

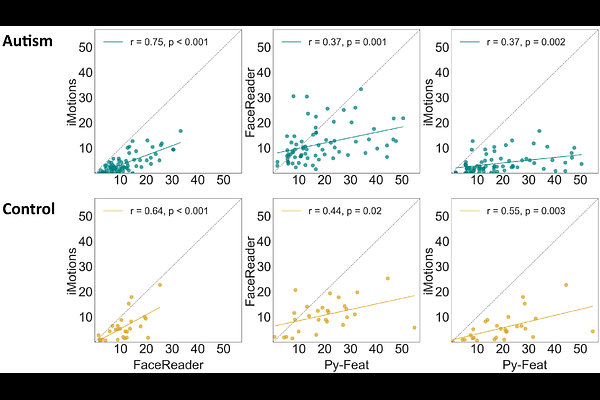

Abstract

Background: Difficulties with non-verbal communication, including atypical use of facial expressions, are a core feature of autism. Quantifying atypical use of facial expressions during naturalistic social interactions in a reliable, objective, and direct manner is difficult, but potentially possible with computer vision facial analysis algorithms that identify facial expressions in video recordings.

Methods: We analyzed >5 million video frames from 100 verbal children aged 2-7 years (72 with autism and 28 controls), who were recorded during a ~45-minute ADOS-2 assessment using module 2 or 3, in which they interacted with a clinician. Three different facial analysis algorithms (iMotions, FaceReader, and Py-Feat) were used to identify the presence of six facial expressions (anger, fear, sadness, surprise, disgust, and happiness) in each video frame. We then compared results across algorithms and across the autism and control groups using ANCOVA analyses while controlling for the age and sex of the participating children.

Results: There were significant differences in the performance of the three facial analysis algorithms, including differences in the proportion of frames identified as containing a face and in the proportion of frames classified as containing each of the examined facial expressions. Nevertheless, analyses with each of the three algorithms demonstrated that there were no significant differences in the quantity of facial expressions produced by children with autism and controls.

Limitations: Our study was limited to verbal children with autism who completed ADOS-2 assessments using module 2 or 3 and were able to sit in a stable manner while facing a wall-mounted camera. In addition, this study focused on comparing the quantity of facial expressions across groups rather than their quality, timing, or social context.

Conclusions: Commonly used automated facial analysis algorithms exhibit large variability in their output when identifying facial expressions of young children during naturalistic social interactions. Nonetheless, none of the three algorithms identified differences in the quantity of facial expressions across groups, suggesting that atypical production of facial expressions in verbal children with autism is likely related to their quality, timing, and social context rather than their quantity during natural social interaction.
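
As an illustration of what "quantity of facial expressions" could mean at the level of a single recording, the following minimal Python sketch computes the proportion of frames in which a face was detected and, among those frames, the proportion in which each of the six expressions was present. It is not the study's pipeline: the per-frame data layout, the function name `summarize_frames`, and the 0.5 score threshold are all assumptions made for the example, and the actual output formats of iMotions, FaceReader, and Py-Feat differ from this toy structure.

```python
# Illustrative sketch only; data layout and threshold are assumptions,
# not the output format of any specific facial analysis tool.
EXPRESSIONS = ("anger", "fear", "sadness", "surprise", "disgust", "happiness")


def summarize_frames(frames, threshold=0.5):
    """Summarize one recording.

    frames: list where each item is either None (no face detected in that
            frame) or a dict mapping expression name -> score in [0, 1].
    threshold: hypothetical cutoff above which an expression counts as present.

    Returns a dict with the proportion of frames containing a face and, for
    each expression, the proportion of face-containing frames in which that
    expression was present.
    """
    total = len(frames)
    face_frames = [f for f in frames if f is not None]
    n_face = len(face_frames)

    summary = {"face_detected": n_face / total if total else 0.0}
    for name in EXPRESSIONS:
        # Missing scores are treated as 0.0 (expression absent).
        n_present = sum(1 for f in face_frames if f.get(name, 0.0) >= threshold)
        summary[name] = n_present / n_face if n_face else 0.0
    return summary


# Toy recording: four frames, one of them without a detected face.
frames = [
    {"happiness": 0.9, "surprise": 0.1},
    {"happiness": 0.2, "surprise": 0.7},
    None,
    {"happiness": 0.8},
]
summary = summarize_frames(frames)
```

Per-child summaries of this kind, computed separately for each algorithm, are the sort of dependent variable that could then be entered into an ANCOVA with group as the factor and age and sex as covariates.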