Enabling Real-Time Fluctuation-Based Super Resolution Imaging

Komen, J.; Tekpinar, M.; Huo, R.; De Zwaan, K.; Tomen, N.; Grussmayer, K.

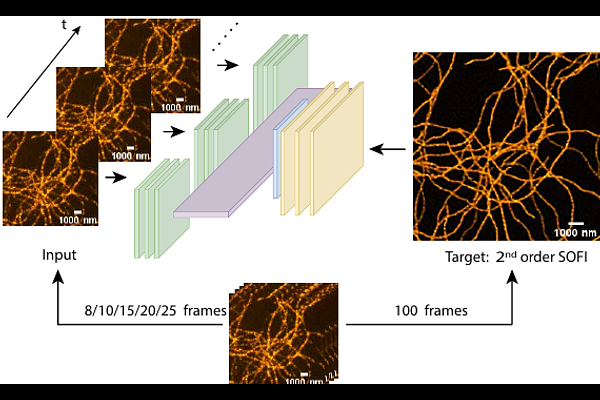

Abstract

Live-cell imaging captures dynamic cellular processes, but many structures remain beyond the diffraction limit. Fluctuation-based super-resolution techniques reach beyond the diffraction limit by exploiting correlations in fluorescence blinking. However, these methods require the acquisition of hundreds of frames and involve computationally intensive post-processing, which can take tens of seconds, limiting their suitability for real-time sub-diffraction imaging of fast cellular events. To address this, we use a recurrent neural network model, MISRGRU, which integrates sequential low-resolution frames to extract spatio-temporally correlated signals. Using super-resolution optical fluctuation imaging (SOFI) as a target, our approach not only significantly improves temporal resolution but also doubles the spatial resolution. We benchmark MISRGRU against the widely used U-Net architecture, evaluating performance in terms of resolution enhancement, training complexity, and inference latency on both synthetic data and real microtubule structures. After optimizing MISRGRU, we demonstrate a 400-fold reduction in computational latency compared to SOFI, highlighting its promise as an efficient, real-time solution for live-cell super-resolution imaging.
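To make concrete how fluctuation-based methods exploit correlations in blinking, the following is a minimal sketch of a second-order SOFI auto-cumulant computed per pixel from a frame stack. It is an illustration under stated assumptions, not the pipeline used in this work: the function name `sofi2_autocumulant`, the `(T, H, W)` array layout, and the `lag` parameter are hypothetical choices, and practical SOFI implementations typically add cross-cumulants, drift correction, and deconvolution.

```python
import numpy as np


def sofi2_autocumulant(stack, lag=0):
    """Second-order auto-cumulant of a (T, H, W) fluorescence frame stack.

    Hypothetical helper for illustration only. For each pixel it averages
    the product of intensity fluctuations at times t and t + lag.
    """
    stack = np.asarray(stack, dtype=np.float64)
    if stack.ndim != 3:
        raise ValueError("expected a stack of shape (T, H, W)")
    if lag < 0 or lag >= stack.shape[0]:
        raise ValueError("lag must satisfy 0 <= lag < number of frames")

    # Subtract the per-pixel temporal mean so only fluctuations remain;
    # any constant background cancels here.
    delta = stack - stack.mean(axis=0, keepdims=True)

    if lag == 0:
        # Zero lag reduces to the per-pixel temporal variance.
        return (delta * delta).mean(axis=0)

    # A non-zero lag correlates each frame with a later one, which
    # suppresses noise that is uncorrelated between frames.
    return (delta[:-lag] * delta[lag:]).mean(axis=0)
```

For a single blinking emitter with a Gaussian point spread function, the zero-lag output is proportional to the squared point spread function, which is narrower by a factor of √2. This per-pixel statistic needs many frames to converge, which is consistent with the acquisition and post-processing cost described in the abstract.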